Getting set up

If there is one realisation in life, it is that you will never have enough CPU or RAM available for your analytics. Luckily for us, cloud computing is becoming cheaper each year. One of the more established providers of cloud services is AWS. If you don’t know yet, they provide a free, yes free, option. Their t2.micro instance is a 1 vCPU, 1 GiB machine, which doesn’t sound like much, but I am running an RStudio and Docker instance on one of these for a small project.

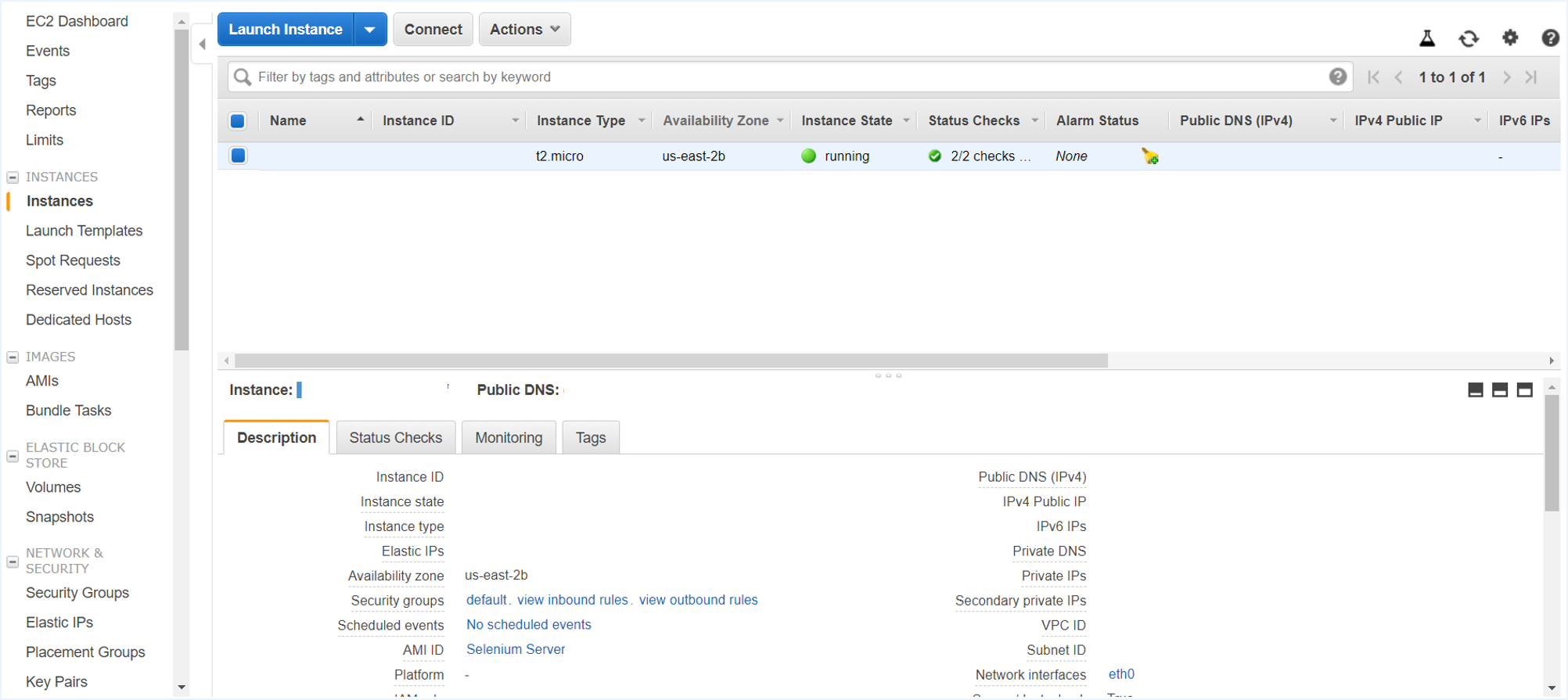

The management console has the following interface:

So, how cool would it be if you could start up one of these instances from R? Well, the cloudyr project makes R a lot better at interacting with cloud-based computing infrastructure. With this in mind, I have been playing with the aws.ec2 package, which is a simple client package for the Amazon Web Services (‘AWS’) Elastic Compute Cloud (EC2) API. There is some irritating setup that has to be done, so if you want to use this package, you need to follow the instructions on the GitHub page to create the AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_DEFAULT_REGION environment variables. But once you have figured out this step, the fun starts.

I always enjoy getting the development version of a package, so I am going to install the package straight from GitHub:

devtools::install_github("cloudyr/aws.ec2")

Next we are going to use an Amazon Machine Image (AMI), which is a pre-built image that already contains all the necessary installations such as R and RStudio. You can also build your own AMI, and I suggest you do so if you are comfortable with the Linux CLI.

Release the beast

library(aws.ec2)

# Confirm that the AWS credentials can be found

aws.signature::locate_credentials()

# Describe the AMI (from: http://www.louisaslett.com/RStudio_AMI/)

image <- "ami-3b0c205e"

describe_images(image)

In the code snippet above you will notice I call the function aws.signature::locate_credentials(). I use this function to confirm my credentials. You will need to supply your own credentials after creating a user profile on the IAM management console and generating an access key for use with the API. My preferred method of supplying the credentials is to add the information to the environment using usethis::edit_r_environ().

Here is my (fake) .Renviron:

AWS_ACCESS_KEY_ID=F8D6E9131F0E0CE508126

AWS_SECRET_ACCESS_KEY=AAK53148eb87db04754+f1f2c8b8cae222a2

AWS_DEFAULT_REGION=us-east-2
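Since .Renviron is only read when R starts, it is worth restarting the session and checking that the three variables actually made it into the environment. The following is a minimal sketch of such a check in base R; missing_aws_vars() is a hypothetical helper written for this post, not a function from aws.ec2 or aws.signature.

```r
# Names of the environment variables the setup instructions ask for.
required_vars <- c("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_DEFAULT_REGION")

# missing_aws_vars() is a hypothetical helper, not part of any package.
# It returns the names of the required variables that are unset or empty.
missing_aws_vars <- function(vars = required_vars) {
  values <- unname(Sys.getenv(vars, unset = ""))
  vars[!nzchar(values)]
}

not_set <- missing_aws_vars()
if (length(not_set) > 0) {
  message("Not set: ", paste(not_set, collapse = ", "))
} else {
  message("All AWS environment variables are set.")
}
```

If anything is reported as not set, fix the .Renviron file and restart R before going further.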

Now we are almost ready to test out the package and its functions, but first, I recommend you source a handy function I wrote that helps to tidy the outputs from selected functions from the aws.ec2 package.

source("https://bit.ly/2KnkdzV")

I found the list object returned from functions such as describe_images(), describe_instances() and instance_status() very verbose and difficult to work with. The tidy_describe() function is there to clean up the outputs and only return the most important information. The function also implements a pretty_print option, which will cat the output in a table to the screen for a quick overview of the information contained in the object.
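To give a feel for the idea without reproducing the sourced script, here is a rough base R sketch of the same approach: keep a handful of fields from a nested list and print them as aligned name and value pairs. This is not the actual tidy_describe(); pick_fields() and print_fields() are hypothetical names, and the nested list is hand-written, borrowing field names from the summary printed in this post.

```r
# A hand-written nested list standing in for an API response.
# The field names come from the summary shown in this post; the nesting
# and the 'extra' element are assumptions made purely for illustration.
response <- list(list(
  imageId = "ami-3b0c205e",
  imageOwnerId = "732690581533",
  creationDate = "2017-10-17T09:28:45.000Z",
  extra = list(nested = list(ignored = "not shown"))
))

# pick_fields() is a hypothetical helper, not the author's tidy_describe().
# It keeps the requested top-level fields of one record as a data frame.
pick_fields <- function(record, fields) {
  present <- intersect(fields, names(record))
  data.frame(
    field = present,
    value = unname(vapply(record[present], as.character, character(1))),
    stringsAsFactors = FALSE
  )
}

# print_fields() writes "name : value" lines, similar in spirit to pretty_print.
print_fields <- function(df) {
  cat(sprintf("%s : %s\n", format(df$field), df$value), sep = "")
  invisible(df)
}

summary_df <- pick_fields(response[[1]], c("imageId", "imageOwnerId", "creationDate"))
print_fields(summary_df)
```

Returning a data frame and printing separately keeps the tidy result available for further work, which mirrors the pretty_print = FALSE option mentioned above.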

Let's use this function to see the output from describe_images() as a pretty_print. Print the aws_describe object without this handy function at your own peril.

library(magrittr) # provides %>%, in case the sourced script has not attached it

image <- "ami-3b0c205e"

aws_describe <- describe_images(image)

aws_describe %>% tidy_describe()

--------------------------------------

Summary

--------------------------------------

imageId : ami-3b0c205e

imageOwnerId : 732690581533

creationDate : 2017-10-17T09:28:45.000Z

name : RStudio-1.1.383_R-3.4.2_Julia-0.6.0_CUDA-8_cuDNN-6_ubuntu-16.04-LTS-64bit

description : Ready to run RStudio + Julia/Python server for statistical computation (www.louisaslett.com). Connect to instance public DNS in web brower (standard port 80), username rstudio and password rstudio

To return as tibble: pretty_print = FALSE

Once we have confirmed that we are happy with the image, we need to save the subnet information as well as the security group information.

s <- describe_subnets()

g <- describe_sgroups()

Now that you have specified those two things, you have all the pieces to spin up the machine of your choice. To see what machines are available, visit the AWS instance types webpage. Warning: choosing big machines with lots of CPU and a ton of RAM can be addictive. Winners know when to stop.

In this example I spin up a t2.micro instance, which is part of the free tier that Amazon provides.

# Launch the instance using appropriate settings

i <- run_instances(
  image = image,
  # You might want to change the type to something like x1e.32xlarge ($26.688 p/h) if you are feeling adventurous
  type = "t2.micro",
  subnet = s[[1]],
  sgroup = g[[1]]
)
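Before getting adventurous, it helps to do the sums. The sketch below multiplies an hourly rate by a number of hours; estimate_cost() is a hypothetical helper, the rate is simply the figure quoted in the comment above, and the estimate ignores storage and data transfer charges. Check the current AWS price list before relying on it.

```r
# estimate_cost() is a hypothetical helper for back-of-the-envelope sums.
# hourly_rate: on-demand price in USD per hour
# hours:       how long the instance is left running
estimate_cost <- function(hourly_rate, hours) {
  hourly_rate * hours
}

# Rate quoted for x1e.32xlarge in the comment above (may be out of date).
x1e_rate <- 26.688

one_day  <- estimate_cost(x1e_rate, hours = 8)       # one working day
one_week <- estimate_cost(x1e_rate, hours = 24 * 7)  # forgotten for a week

print(c(one_day = one_day, one_week = one_week))
```

At that rate a working day comes to a little over 200 dollars, and an instance forgotten for a week to almost 4,500 dollars.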

Once I have executed the code above, I can check on the instance using instance_status() to see if the machine is ready, or describe_instances() to get the meta information on the machine, such as the IP address. Again, I use the custom tidy_describe():

aws_instance <- describe_instances(i)

aws_instance %>% tidy_describe()

--------------------------------------

Summary

--------------------------------------

ownerId : 748485365675

instanceId : i-007fd9116488691fe

imageId : ami-3b0c205e

instanceType : t2.micro

launchTime : 2018-06-30T13:15:50.000Z

availabilityZone : us-east-2b

privateIpAddress : 172.31.16.198

ipAddress : 18.222.174.186

coreCount : 1

threadsPerCore : 1

To return as tibble: pretty_print = FALSE

aws_status <- instance_status(i)

aws_status %>% tidy_describe()

--------------------------------------

Summary

--------------------------------------

instanceId : i-007fd9116488691fe

availabilityZone : us-east-2b

code : 16

name : running

To return as tibble: pretty_print = FALSE
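An instance does not reach the running state (code 16 in the output above) the moment it is launched, so in a script you would typically poll until it gets there. Below is a minimal sketch of such a loop. wait_until() is a hypothetical helper, not part of aws.ec2, and it takes a function that returns the state name so that it can be tried without an AWS account. In real use that function would wrap instance_status(i) and pull the state name out of the returned list, whose exact structure is not shown here.

```r
# wait_until() is a hypothetical helper, not part of aws.ec2.
# get_state: zero-argument function returning the current state as a string
# target:    state to wait for
# interval:  seconds to sleep between checks
# max_tries: give up after this many checks
wait_until <- function(get_state, target = "running", interval = 5, max_tries = 60) {
  for (attempt in seq_len(max_tries)) {
    if (identical(get_state(), target)) {
      return(attempt) # number of checks it took
    }
    Sys.sleep(interval)
  }
  stop("Instance did not reach state '", target, "' after ", max_tries, " checks")
}

# Offline stand-in: reports "pending" twice, then "running".
fake_states <- c("pending", "pending", "running")
calls <- 0
fake_get_state <- function() {
  calls <<- calls + 1
  fake_states[min(calls, length(fake_states))]
}

tries <- wait_until(fake_get_state, interval = 0)
```

The max_tries limit is there so that a machine that never comes up stops the script with an error instead of looping forever.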

The final bit of code (which is VERY important when running large instances) is to stop the instance and terminate it:

# Stop and terminate the instances

stop_instances(i[[1]])

terminate_instances(i[[1]])
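If an analysis script fails halfway through, the terminate call at the bottom never runs and the meter keeps ticking. One way to guard against that is R's on.exit(), which runs cleanup code even when an error is thrown. The sketch below shows the pattern with stand-in functions so it can be run offline; with_instance() and the fake_* functions are hypothetical and not part of aws.ec2.

```r
# with_instance() is a hypothetical wrapper, not part of aws.ec2.
# launch:    zero-argument function that starts an instance and returns a handle
# terminate: function that takes the handle and shuts the instance down
# work:      function that takes the handle and does the actual analysis
with_instance <- function(launch, terminate, work) {
  handle <- launch()
  # on.exit() fires even if work() throws, so the instance is not left running
  on.exit(terminate(handle), add = TRUE)
  work(handle)
}

# Offline stand-ins that record what happened instead of calling AWS.
events <- character(0)
fake_launch <- function() {
  events <<- c(events, "launched")
  "i-fake"
}
fake_terminate <- function(handle) {
  events <<- c(events, paste("terminated", handle))
}
failing_work <- function(handle) stop("analysis failed")

result <- try(with_instance(fake_launch, fake_terminate, failing_work), silent = TRUE)
print(events)
```

With the real package, launch could be a function wrapping the run_instances() call from earlier and terminate a function wrapping terminate_instances(i[[1]]).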

Final comments

Working with AWS instances for a while now has really been a game changer in the way I approach any analytical project. Having the capability to switch on large machines on demand and quickly run any of my analytical scripts has opened up new opportunities for what I can do as a consultant with a very limited budget to spend on hardware. Also, where would I keep my 96 core, 500GB RAM machine once I have scraped enough cash together to actually build it?